Disruptive new materials - thanks to computing power and quantum theory

The History of Energy Technology – The discovery of the nanoworlds enables a renewable energy supply for all (18)

The creation of new materials that offer unprecedented energy and material savings is accelerating, yet the general public has barely noticed. The huge increase in computing power is beginning to fundamentally change the way we work with materials: the potential of quantum theory can finally be exploited in a very practical way across the whole spectrum of materials science. For the last half-century, both the cost and the energy required for a unit of computing power have fallen by a factor of about twenty every decade. The history of recent materials science shows that this is the trigger for a wave of innovation that may drastically reduce the energy and material intensity of our civilisation.
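To get a feeling for what "a factor of about twenty every decade" means when sustained over half a century, the figure can simply be compounded. The following is a minimal sketch that takes the rough factor from the text at face value; the function names are illustrative and not taken from any source.

```python
# Illustrative arithmetic only: compounds the article's rough figure of
# "a factor of about twenty every decade" over five decades.

def cumulative_reduction(factor_per_decade: float, decades: int) -> float:
    """Total factor by which cost (or energy) per unit of computing falls."""
    return factor_per_decade ** decades


def annual_decline(factor_per_decade: float) -> float:
    """Equivalent fractional decline per year for a given per-decade factor."""
    return 1.0 - factor_per_decade ** (-1.0 / 10.0)


total = cumulative_reduction(20, 5)
yearly = annual_decline(20)
print(f"Cumulative reduction over 50 years: about {total:,.0f}-fold")
print(f"Equivalent decline per year: about {yearly:.0%}")
```

Under this assumption, five decades add up to a reduction by a factor of roughly three million, which corresponds to a decline of about a quarter per year.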

The last episode of this series covered the history of materials sciences up to about 1970, when the Cold War subsided. With new questions, new methods and increasing computing power, the pace of development accelerated. After the end of the Cold War in 1990, the initially mechanistic vision of nanotechnology mobilised new resources. Since the turn of the millennium, the possibility of solving quantum theoretical equations for more complex systems has led to rapid progress and completely new materials. The example of battery development shows how the development of completely new types of materials opens up unexpected new horizons.

End of the post-war period: Internationalisation of materials science with a civilian focus

Around 1970, social and economic-industrial issues began to take precedence over military concerns. The Vietnam War led to international protests, and the growth model of Fordist mass production ran into difficulties; striking workers, protesting students and the beginnings of the environmental movement demanded other priorities. The Cold War became less important, while declining economic growth, environmental problems and then the oil crisis led to smaller military programmes and the search for answers to new questions.

My article series on the history of energy technology:

The discovery of nanoworlds enables a renewable energy supply for all

The episodes so far:

Nuclear fission: early, seductive fruit of a scientific revolution

Where sensory experience fails: New methods allow the discovery of nano-worlds

Silicon-based virtual worlds: nanosciences revolutionise information technology

Climate science reveals: collective threat requires disruptive overhaul of the energy system

The history of fossil energy, the basis of industrialisation

The climate crisis is challenging the industrial civilisation: what options do we have?

Nanoscience has made electricity directly from sunlight unbeatably cheap

Photovoltaics: Increasing cost efficiency through dematerialisation

50 years of restructuring the electricity system — From central control to network cooperation

Emancipation from mechanics — the long road to modern power electronics

Power electronics turns electricity into a flexible universal energy

During the years of reconstruction and the Cold War, technological progress was largely uncritically supported. There was also little scepticism about arms research. But nuclear weapons had demonstrated for the first time the potential of technology to destroy humanity. And the complicity of scientists who had worked for the Nazi regime and its Holocaust had destroyed the myth of pure science. From the 1970s onwards, the younger generation in particular increasingly demanded social responsibility in the development of science and technology. The peace, anti-nuclear and environmental movements gained increasing influence in the public debate.

In this new situation, the materials sciences that had emerged in the US also had to reposition themselves. In addition to metals and semiconductors, ceramics, plastics and glass increasingly came to the fore. Physics receded somewhat into the background, while chemistry and engineering became more important. The name of the discipline and the laboratories changed from "Materials Science" (MS) to "Materials Science and Engineering" (MSE). As a result, in 1972, responsibility for the materials science laboratories was transferred from the Pentagon-run Advanced Research Projects Agency (ARPA) to the National Science Foundation (NSF), an independent federal agency.

As we saw in the last part of this series, the emergence of government-led, primarily military, materials science was initially mainly an American development. As civilian concerns gained the upper hand, it became independent and internationalised. In Western Europe, the focus after the Second World War was primarily on basic research. First military, then civilian nuclear technology was developed in large research centres. It was only later that more intensive efforts were made in applied research. The internationalisation of interdisciplinary materials science since 1970 has been driven by the increasing number of civil applications and new classes of materials, as well as the international spread of new nanoscience research methods.

New research methods: the example of microscopy

The development of microscopy illustrates the progress made in research methods. Conventional multi-lens light microscopes, which were used to discover microorganisms in the 17th century, cannot resolve objects smaller than about 250 nm (1 nanometre = one-millionth of a millimetre) because of the wavelength of visible light. Today's sophisticated, digitised light microscopes are subject to the same limit, but they can count objects automatically or measure them in three dimensions.
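The link between wavelength and resolution can be made concrete with the Abbe diffraction limit, d = λ / (2 · NA), where NA is the numerical aperture of the objective. The following is a minimal sketch, not a description of any particular instrument: the function name and the numerical aperture of 1.0 are assumptions chosen for illustration. With light of 500 nm wavelength they reproduce the limit of roughly 250 nm mentioned above.

```python
# Abbe diffraction limit: smallest resolvable distance d = wavelength / (2 * NA).
# The numerical aperture of 1.0 is an assumed, illustrative value.

def abbe_limit_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Smallest resolvable distance in nanometres for a light microscope."""
    if wavelength_nm <= 0 or numerical_aperture <= 0:
        raise ValueError("wavelength and numerical aperture must be positive")
    return wavelength_nm / (2.0 * numerical_aperture)


# Visible light spans roughly 400 nm (violet) to 700 nm (red).
for wavelength_nm in (400.0, 500.0, 700.0):
    limit = abbe_limit_nm(wavelength_nm, numerical_aperture=1.0)
    print(f"{wavelength_nm:.0f} nm light -> resolution limit about {limit:.0f} nm")
```

Shorter wavelengths give finer resolution, which is why electron beams, with their far shorter wavelengths, can resolve much smaller structures than visible light.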

In 1926, after quantum theory had shown that electrons could also be seen as waves, it became possible to construct "electron lenses" using magnetic fields. In 1931, Ernst Ruska and Max Knoll at the Technical University of Berlin built the first electron microscope, which used electron beams instead of light beams to shine through thin samples. It was soon in widespread use. In 1937, Manfred von Ardenne (1907-1997) built the first scanning electron microscope, which scanned opaque samples with a thin electron beam. However, the electronics of the time made it difficult to combine and image the signals from different detectors. It was not until 1965 that commercial scanning electron microscopes became available; they are still relatively expensive today. They achieve a resolution of up to 1 nm, roughly three times the diameter of an iron atom. Spectroscopic examination of the secondary radiation allows special scanning electron microscopes to identify the elements locally present in the sample.

The next great leap forward in microscopy came in 1981: Gerd Binnig (*1947) and Heinrich Rohrer (1933-2013) invented the scanning tunnelling microscope, for which they were awarded the Nobel Prize in Physics in 1986. According to classical physics, a current can only flow when a voltage is applied if there is a conductive connection. According to the laws of quantum mechanics, however, an electron can "tunnel" through very small distances with a certain probability thanks to its wave nature. The scanning tunnelling microscope makes use of this effect: surfaces are scanned without contact using a fine tip, measuring the "tunnel current", which depends on the applied voltage, the distance and the atomic composition of the surface. This makes it possible to "see" individual atoms, as the resolution is 0.1 nm. The devices are now inexpensive and fit on a laboratory bench.

This was the breakthrough for single-atom analysis, albeit only on surfaces. Further scanning microscopes were developed, including the atomic force microscope (AFM) in 1985, which measures the atomic forces between the tip and the surface. Today, even individual atoms can be moved with the fine tips. Increasingly powerful electronics and computing, especially after the introduction of the microprocessor in 1971, have played a major role in these developments.

As the Cold War ends, the view of the world changes

When the Cold War came to an end around 1990 with the demise of the Warsaw Pact and then the Soviet Union, the world was already a very different place than it had been in 1970 — politically, socially and technologically. After the end of the East-West confrontation, people hoped for peaceful development and debated intensively what role new technologies should play in the future global society in the face of growing environmental problems.

Microprocessors already had around 1 million transistors and clock speeds of 25 MHz. Personal computers (PCs) were on every scientist's desk, and most measuring instruments had greatly increased their power and manageability with processors and screens. New methods were added. The large-scale equipment that had been so prized during the Cold War — huge particle accelerators, built primarily for the spectacular hunt for elementary particles and increasingly used for materials analysis — was becoming less important. Instead, small and medium-sized machines took over the laboratories, and decentralised research in smaller teams became efficient. This also corresponded to the structural change in the global economy: International corporations replaced centralised structures with global networks or split into several parts, and central laboratories were replaced by international cooperation between regional research centres and with suppliers.

Coming from different disciplines, materials scientists increasingly developed a common understanding of how atomic structures and processes relate to the macroscopic properties of matter. Shared methods and instrumentation created links, common terminology and similar interpretations. Researchers' interests increasingly converged on a common spatial scale between 0.1 and 100 nanometres.

But what was their overarching mission? With the end of the Cold War, the military justification for generous public research funding, especially in the US, had become difficult. Advertising new technologies in everyday life by announcing that they were a product of space travel, from pan coatings to cordless drills to mattress materials, had also lost its appeal; the moon landing by then lay a good two decades in the past. As the tasks of materials science fragmented according to the interests of the industries involved, public funding became less and less justifiable. Researchers, science managers and technology policymakers realised that a new impetus for government funding required a compelling vision that could be communicated to the public.

Nanotechnology — a mechanistic vision mobilises new resources

Inspired by advances in biochemistry, Eric Drexler (*1955) of the Space Systems Laboratory at MIT (Massachusetts Institute of Technology) had been promoting "molecular engineering" since the early 1980s. He soon became enthusiastic about the "coming era of nanotechnology", in which new structures could be created atom by atom with "nanomachines", combined into "universal assemblers". "With assemblers we will be able to remake our world or destroy it".

He was referring to a famous lecture given in 1959 by the legendary physicist Richard Feynman (1918-1988), who won the Nobel Prize in 1965 for his work on quantum electrodynamics and was famous for his vivid presentations. "There's Plenty of Room at the Bottom" was the title of Feynman's visionary lecture to the American Physical Society. Pointing to extremely small biological systems that exploit physical principles at the molecular level, he asked, "What would happen if we could arrange the atoms one by one the way we want them?" and imagined how powerful computers that process information at the atomic level, or medical repair machines of this size, could be. Today, 65 years later, it is astonishing how far-sighted Feynman was even then, and how much he still formulated his ideas in mechanical terms, thinking, for example, about the lubrication of bearings. Above all, Drexler took up the idea of nanoscale mechanics: "To have any hope of understanding our future, we must understand the consequences of assemblers, disassemblers and nanocomputers".

Drexler remained an ardent promoter of nanotechnology for decades, but not all materials scientists shared his vision. More reserved was Mihail C. Roco, a mechanical engineer who obtained his doctorate in Bucharest in 1976, held professorships in the US, the Netherlands and Japan, and joined the NSF (National Science Foundation) in 1990. There, he became enthusiastic about nanotechnology and soon persistently pursued the development of an ambitious inter-agency programme for nanotechnology research.

American policymakers were convinced that with the end of the Cold War, economic and technological competition would replace the arms race, and that the US would have to reposition itself for this. In 1994, President Bill Clinton and Vice President Al Gore declared investment in science to be a "top priority for building the America of tomorrow" in "Science in the National Interest", the first US presidential strategy paper on research policy in 15 years. At the time, Japan in particular was seen as a rival. Its growing economic power was increasingly perceived as a threat in the US.

In 1991, Japan launched a US$225 million nanotechnology funding programme. Europe also had nanotechnology research programmes. Between 1996 and 2001, about 90,000 nano-related scientific publications appeared worldwide (13% materials science, 10% applied physics, 10% physical chemistry, 8% solid state physics, 6% general chemistry, with materials science and chemistry showing the highest growth rates). 40% of the world's nano-related publications came from the EU, 26% from the USA and 13% from Japan.

In 1985, a British-American team discovered fullerenes (closed hollow bodies of 60 or more carbon atoms formed in geometric regularity from pentagons and hexagons), for which the scientists were awarded the Nobel Prize in Chemistry in 1996. After it was demonstrated in 1990 that it was possible to produce fullerenes in large quantities, a wave of enthusiasm for the new substance broke out. On the one hand, it was hoped that it would open up new horizons for chemistry, like the discovery of benzene in the 19th century (see episode 16). On the other hand, its unusual physical properties also promised new applications beyond chemistry. By the mid-1990s, however, disillusionment had set in. Nanotubes, a variant of fullerenes that is becoming increasingly important today, are hollow tubes made of carbon hexagons that exhibit extreme strength. It is only now that they can be fully described by quantum theory that their potential is beginning to be realised.

In this environment, Roco skilfully forged a broad coalition of supporters for a National Nanotechnology Initiative (NNI). In doing so, he drew on Drexler's vivid vision. From the outset, the programme was conceived as an innovative, multi-agency strategy that would involve a wide range of universities, institutions and companies, inspire young researchers to enter the sciences and trigger a multifaceted innovation drive. Apart from doubts about this organisational approach, critics warned above all about the unresolved consequences of nanotechnologies. Prominent sceptics even feared that autonomous, self-propagating nano-assemblers could become a danger to humanity. In autumn 2000, the US Congress approved $465 million for the NNI.

The programme has grown considerably over the years: in 2010, the budget was already US$1.9 billion; for 2024, President Biden requested US$2.16 billion. As the name suggests, the field of research is rather technology-oriented and has been repeatedly redefined as a funding programme. Nanotechnology is not a scientific discipline, but a term that refers to the nano dimension and to applicability across the sciences. The NNI simply says: “Nanotechnology is the understanding and control of matter at the nanoscale, at dimensions between approximately 1 and 100 nanometers, where unique phenomena enable novel applications”. In 2015, six times as many scientific articles on nanotechnology topics were published worldwide as in 2000, while the proportion of Chinese publications rose from 10% to 36%.

Customised membranes reduce industrial energy consumption

Materials scientists around the world have taken advantage of nanotechnology funding programmes and expanded their institutions. But nanotechnology and materials science are not the same. It is now almost impossible to keep track of where materials science discoveries, made possible by quantum mechanics and new computing methods, are playing a central role. New materials with astonishing properties, unimaginable just two decades ago, are appearing in all kinds of industries.

Electronics — which now underpins software and artificial intelligence, and enables a whole new level of information processing and communication — was just the beginning. In previous episodes, we have seen how new materials sciences are enabling photovoltaics and power electronics; in a moment, we will look at batteries. But the potential is far from exhausted.

One example is membranes: we saw in the penultimate episode how selectively permeable barriers enabled major advances in the first batteries. Specialised membranes play an important role in biology. Thanks to detailed quantum-mechanical modelling, it is now possible to make membranes that are permeable to specific molecules and ions but not to others, depending not only on size but also on other physical and chemical properties. This means that a revolution is now underway in many industries, especially energy-intensive ones such as chemical production. Separation accounts for about 15 percent of global energy consumption and about 75 percent of the cost of raw materials and chemicals.

The most important method of separating chemical substances to date is distillation: a mixture of substances is heated to such an extent that one substance vaporises while another remains liquid. The expelled gaseous part becomes liquid again after cooling (which often requires large quantities of cooling water). A multi-stage process is usually required to achieve a high degree of purity. Schnapps has always been distilled in this way. Chemical plants and refineries consist largely of large distillation columns that separate the still-mixed reaction products of chemical reactions into usable products. Desalination plants, which produce drinking water from seawater, are also still largely based on this energy-intensive principle.

With the possibility of developing membranes that allow one substance to pass but not another, it becomes attractive to replace distillation towers, at least in part, with membranes. Heating and cooling are largely eliminated, and the additional energy consumed by the pumps is usually much lower. Energy savings vary depending on the task and the suitability of the membrane. For the US, researchers estimate that industry accounts for 32 percent of total energy consumption, that about half of this is used for separation processes, and that about half of that (8 percent of total energy consumption) is used for distillation processes, 90 percent of which could be saved by membrane-based separation processes (data from 2015). Thermal drying and evaporation processes account for another thirty percent of industrial energy consumption and can also be made much more energy efficient with membranes. Water consumption can also be drastically reduced by saving on cooling water and by filtering and recycling wastewater. In addition, the separation process can be fully electrified.
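The chain of shares in the US estimate can be followed step by step. The following is a minimal sketch that reads the 32 percent as industry's share of total energy consumption, an interpretation that is consistent with the 8 percent figure; the function and variable names are illustrative, and the additional pump energy mentioned above is not included.

```python
# Illustrative arithmetic for the US estimate quoted in the text (data from 2015).
# Assumption: 32 percent is industry's share of total US energy consumption.

def distillation_saving_shares(
    industry_share: float = 0.32,         # industry's share of total energy use
    separation_fraction: float = 0.5,     # "about half" of industrial energy
    distillation_fraction: float = 0.5,   # "about half" of separation energy
    membrane_saving: float = 0.90,        # share of distillation energy saved
) -> dict:
    """Return each step as a share of total energy consumption."""
    separation = industry_share * separation_fraction
    distillation = separation * distillation_fraction
    saving = distillation * membrane_saving
    return {
        "separation": separation,
        "distillation": distillation,
        "potential saving": saving,
    }


shares = distillation_saving_shares()
for name, value in shares.items():
    print(f"{name:>16}: {value:.1%} of total energy consumption")
```

On these figures, replacing distillation with membranes could save about 7 percent of total US energy consumption, before any savings in drying and evaporation are counted.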

For many processes, the search for suitable membranes has only just begun, and even where membrane technologies are available, the transition is still in its early stages. Quantifying progress and potential is not easy: the chemical associations believe that many technologies and their use are not disclosed.

Materials science is revolutionising our relationship with the material world

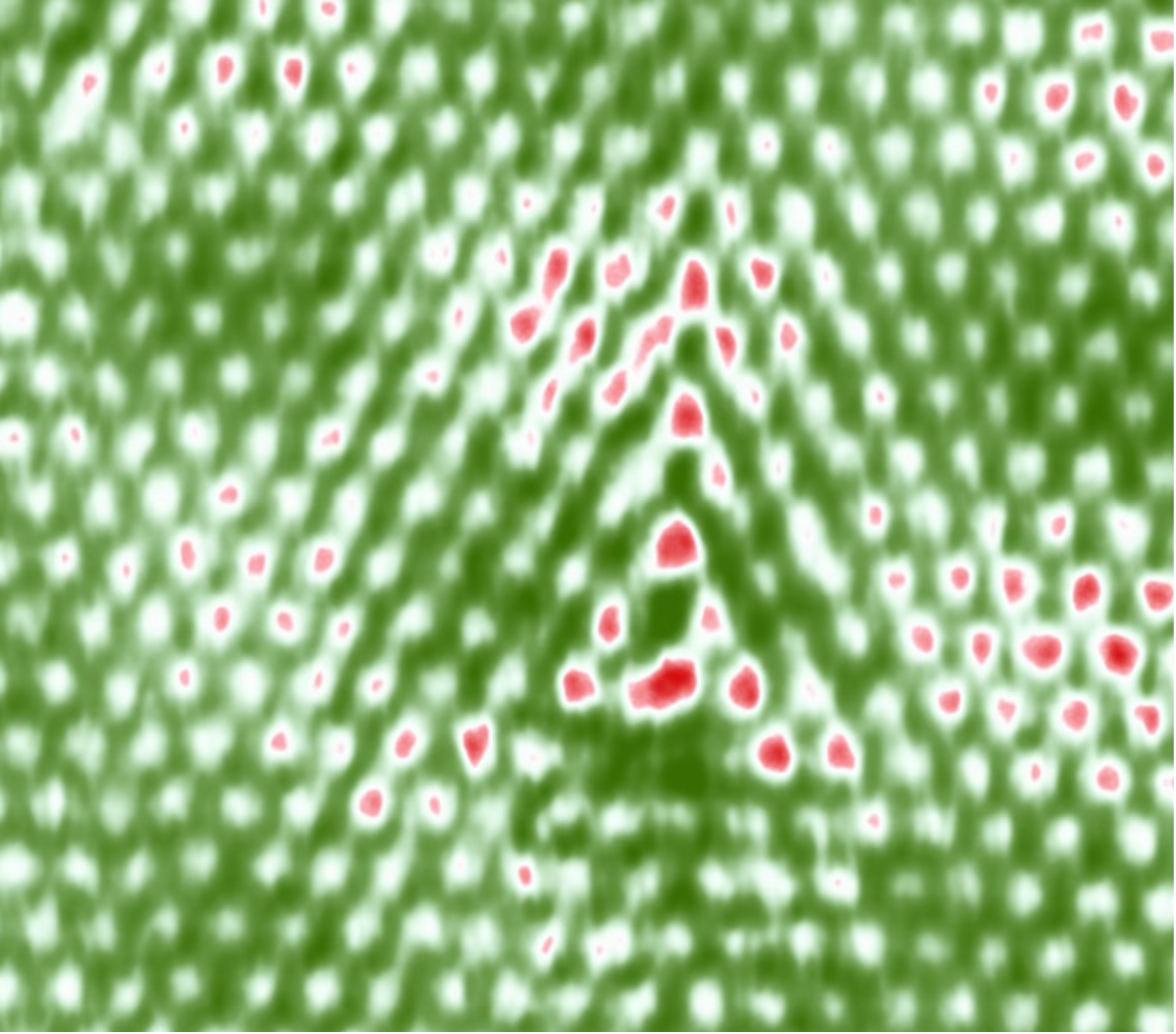

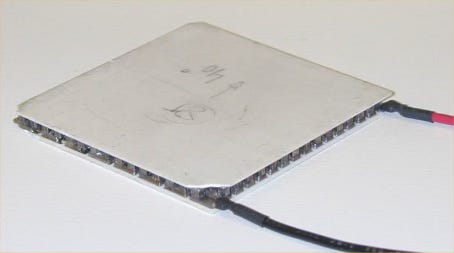

Another example of the incipient revolution brought about by the new materials sciences is the recent progress in the design of thermoelectric elements. As early as the 19th century, thermoelectric effects were discovered that make it possible either to use temperature differences to generate electrical energy (Seebeck effect, thermoelectric generator) or, conversely, to use electricity to create temperature differences for cooling or heating (Peltier effect, solid-state heat pump). Such thermoelectric elements are already widely used for special applications (e.g. as temperature sensors, for camping refrigerators or for cyclic heating and cooling in laboratory equipment).

They would be an ideal low-maintenance device for waste heat recovery or as a heat pump/cooling unit suitable for mass application in the new energy world, if only their efficiency were not so low. For example, the efficiency of thermoelectric chillers is a quarter of that of compression chillers. This is mainly because optimal thermal insulation between the hot and cold sides and optimal electrical properties are at odds with each other in the materials used to date. In recent years, however, significant progress has been made with new exotic materials. Recently, a breakthrough seems to have been achieved by a broad search of databases containing a large number of quantum-theoretically calculated compounds. Several promising candidate materials have been identified. In the coming years, thermoelectric elements are expected to be used on a larger scale to convert heat that has so far gone largely unused into electricity. For example, work is underway to significantly increase the efficiency of solar cells by harnessing the unavoidable heat generated by solar radiation that cannot be captured by the photovoltaic effect.

Other examples of materials science innovation are less spectacular but have a wider impact:

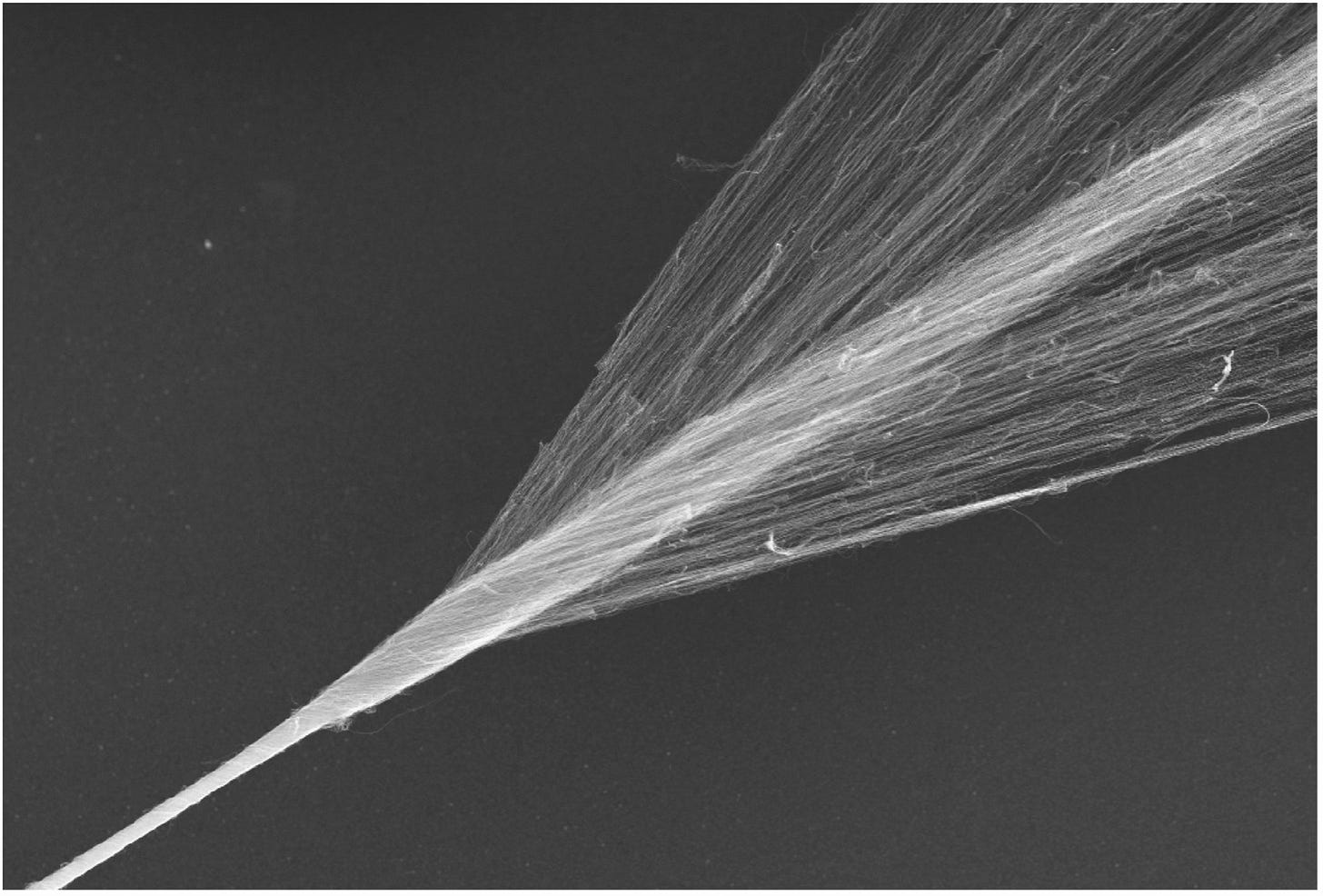

Carbon fibres are becoming much stronger thanks to better defect detection, new production techniques and the addition of nanotubes or graphene.

Quantum theoretical modelling is helping to develop new materials for stronger permanent magnets that are no longer dependent on rare elements — increasingly important for high-efficiency, lightweight electric motors.

Nanoporous aerogels are highly effective insulating materials that are becoming cheaper to produce thanks to new materials and processes, and are therefore increasingly used not only in manufacturing but also in construction.

A combination of artificial intelligence and quantum mechanics is increasingly helping in the search for new materials: To date, researchers have identified around 48,000 different crystals. Google DeepMind recently found an additional 2.2 million possible crystals. In just a few months, researchers were able to synthesise 700 of them.

As a result, nanoscience enables not only completely new ways of processing and communicating information, or of understanding and manipulating biological systems, including completely new ways of producing food. Materials science also opens up new possibilities for optimising the mechanical, electrical, thermal and optical properties of industrially used materials. Our relationship with the material world is changing rapidly.

On the one hand, this opens up completely new applications, and on the other, it can make it possible to achieve the same result with significantly less material and energy. Information can replace material and energy. The importance of research compared to physical production is increasing. But whether consumption will fall as efficiency rises depends on whether people use the new opportunities wisely. This is urgently needed: in 2024, Earth Overshoot Day already falls on 1 August. By that day, humanity will have used as many renewable resources as the Earth can produce in a whole year.

Novel batteries thanks to quantum theory in materials science

Robert A. Huggins and Carl Wagner were influential co-founders of modern materials science. With their work on the movement of ions in solids in the 1960s, they laid essential foundations for the later development of the lithium-ion battery.

Metallurgist Robert A. Huggins (*1929) founded the Department of Materials Science and Engineering and then the Centre for Materials Research at Stanford University in 1959. He was a co-founder of materials science journals and societies, and for a time was director of the Materials Science Division at ARPA. He later described a sabbatical with Carl Wagner at the Max Planck Institute for Biophysical Chemistry in Göttingen in 1965/66 as a crucial stimulus for his later work on lithium-ion batteries in the US and Germany.

Carl Wagner (1901-1977) was one of the founders of solid-state chemistry. Early on, he was an extraordinarily productive academic researcher at various German universities. In 1933 he became a member of the Nazi organisation SA. He later made important contributions to the development of German rocket weapons. After 1945, he evaded denazification by going to the US as a member of Wernher von Braun's team, where he worked on rocket fuels and became a professor of metallurgy at MIT. In 1958 he returned to Germany to become director of the Max Planck Institute in Göttingen, succeeding Karl-Friedrich Bonhoeffer, a physical chemist who had been nominated five times for the Nobel Prize and had a very different past: he came from a family that had been active in the resistance against the Nazis, had given up hydrogen research to avoid becoming involved, as his friend Werner Heisenberg was, in the development of a German atomic bomb, and had probably provided information to the British Secret Service.

As early as 1841, it was discovered that sulphuric acid could be incorporated into graphite and expelled again, significantly altering the electrical, mechanical and optical properties of graphite. The foreign molecules were apparently deposited inside the graphite crystals. This was called intercalation. Over the decades, more such intercalations were discovered, but they could not be explained or predicted. It was only with new insights into the movement of the intercalated ions (charged atoms or molecules) that the mechanisms and effects began to be better understood. It soon became clear that this could be very interesting for the construction of more efficient batteries: if electrochemical charge carriers could be stored not only on the surface of electrodes, but also inside them, this promised a much higher capacity. Intercalation became the key to a whole new generation of batteries.

However, there was still a long way to go, involving many different specialists. It was not until 2019 that John B. Goodenough, M. Stanley Whittingham and Akira Yoshino were awarded the Nobel Prize in Chemistry for their decisive contributions to the invention of the lithium-ion battery.

In 1976, the chemist Whittingham (*1941) built the first lithium-ion battery at the oil company Exxon. It was based on the intercalation of lithium ions in titanium disulfide. At the time, the oil industry was keen to develop alternatives to crude oil, which it feared would soon run out. Whittingham emphasised the fundamental importance of intercalation for the new generation of batteries, far beyond lithium-ion. "It's like putting jam in a sandwich," he later said in an interview, "in chemical terms it means you have a crystal structure and we can put lithium ions in and take them out and the structure is exactly the same afterwards."

In 1980, at Oxford University, the materials scientist Goodenough (1922-2023) succeeded in doubling the performance of lithium-ion batteries by using a different cathode material. He relied heavily on detailed quantum theoretical modelling of the interaction between lithium ions and host crystals. His patent was licensed by the UK Atomic Energy Research Establishment to Sony and other Japanese companies. Before this, Goodenough's work at MIT on the magnetic properties of metal oxides had made a significant contribution to the development of RAM (random access memory), which is still a central component of computers today. In 1970, he became interested in renewable energy as a way to end the world's dependence on oil. Solar energy requires suitable energy storage systems, and he wanted to help develop them. Not allowed to work on this in his Air Force-funded lab at MIT, he eventually went to Oxford. Early on, he practised persistence and determination, overcoming his dyslexia, about which little was known at the time, to go from being a poor pupil to becoming a brilliant mathematician and physicist. He continued his research into new types of batteries into his old age and died in 2023 at the age of 100.

Finally, in 1985, Japanese chemist Akira Yoshino (*1948), working for Asahi Kasei in Kawasaki, improved the safety of the new type of battery by avoiding pure lithium, making it practical for use in consumer products. In 1991, Sony and Asahi Kasei began commercial sales of rechargeable lithium-ion batteries.

It was quantum theory that made it possible over the years to understand the movement and storage of ions in crystal lattices in a way that allowed intercalation to be used for batteries, and then to find particularly powerful material combinations. This momentous innovation would not have been possible without the new materials sciences, which bring together different disciplines.

The next part of this series will look at the triumph of the lithium-ion battery, new types of batteries and the far-reaching changes made possible by low-cost electricity storage.