The Rise of Modern Materials Sciences

The History of Energy Technology – The discovery of the nanoworlds enables a renewable energy supply for all (17)

Translation from the German original by Dr Wolfgang Hager

The development of materials science has accompanied us throughout this series. But to really understand the fundamental importance of new batteries and other new technologies, it is necessary to delve a little deeper into this history.

At the beginning of the twentieth century, new physical discoveries about the nature of matter shook the scientific worldview: the phenomena of our everyday world turned out to be the statistical result of strange processes in a world of atomic nuclei, atomic shells and their compounds. Energy and matter proved to be different aspects of the same thing.

Initially, the focus of interest was on the atomic nuclei, the enormous forces at work there and the even smaller particles they are made of. With atomic bombs of unimaginable destructive force, the great powers armed themselves for "mutually assured destruction". By taming nuclear forces in spectacular power plants, they hoped to solve all energy problems, and with huge particle accelerators, to fathom the last remaining secrets of nature.

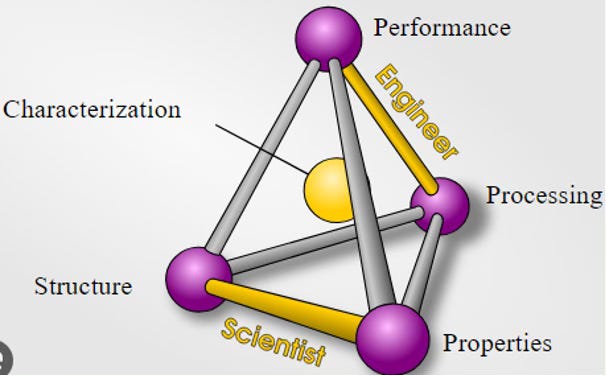

Much more significant for our world, however, was the question of how the atomic shells, which consist of electrons, bond with one another. Understanding this more precisely turned out to be the key to explaining the bewildering diversity of our world and to discovering entirely new possibilities. Alongside the classical natural sciences, a hybrid discipline arose: materials science, which deals with complex, large assemblies of atoms. This opened up entirely new perspectives for technical systems in the nanoworlds, especially for systems that allow a much more sustainable use of energy.

In the last instalment we saw how, starting with the development of classical batteries, electrochemistry and chemistry in general made huge progress in the nineteenth century. It became increasingly possible to ask how the macroscopic behaviour of certain substances could be explained by the properties of their smallest particles. Building on this, we will now look at the development of materials science based on quantum theory, in more detail than in previous episodes and this time from the perspective of battery development. We have already encountered the key role of the new sciences of solid materials in the development of photovoltaics, microelectronics and power electronics. The development of entirely new types of batteries from the 1980s onwards relied on these discoveries in materials science but required further breakthroughs.

My article series on the history of energy technology:

The discovery of nanoworlds enables a renewable energy supply for all

The episodes so far:

Nuclear fission: early, seductive fruit of a scientific revolution

Where sensory experience fails: New methods allow the discovery of nano-worlds

Silicon-based virtual worlds: nanosciences revolutionise information technology

Climate science reveals: collective threat requires disruptive overhaul of the energy system

The history of fossil energy, the basis of industrialisation

The climate crisis is challenging the industrial civilisation: what options do we have?

Nanoscience has made electricity directly from sunlight unbeatably cheap

Photovoltaics: Increasing cost efficiency through dematerialisation

50 years of restructuring the electricity system — From central control to network cooperation

Emancipation from mechanics — the long road to modern power electronics

Power electronics turns electricity into a flexible universal energy

This instalment will deal with the discovery of the quantum structure of matter, the development of quantum chemistry and the development of materials science up to around 1970. The following instalment will then shed light on how the conditions thus created, together with advances in computer technology and changing social requirements, were able to make quantum theory useful for materials science not only in general conceptual terms but also in practical terms, allowing the development of completely new materials and processes. Only then can we see the huge step which lithium-ion batteries represent and the developments we can still expect on this basis.

Electricity and Matter: Quantum theory reveals the structure of atoms

As described in the last instalment, chemistry at the end of the nineteenth century was rapidly accumulating experimental results and methods. With the periodic table of the elements it had acquired a powerful tool that allowed the creation of many new substances and the development of an increasingly powerful industry. However, it was not yet understood why chemical bonds form. It was assumed that atoms combine to form molecules, but it was unclear what the atoms themselves were.

It was known from electrochemistry that many chemical bonds had something to do with electrical charges. Maxwell had completed classical electrodynamics with his equations in 1864, but initially this had no consequences for the world of atoms and molecules. Towards the end of the century, however, increasingly perplexing observations were made regarding the interaction between matter and electromagnetic radiation, which includes visible light.

Experiments with cathode rays, radioactive radiation and X-rays led to a more precise understanding of atoms. In 1897, Joseph J. Thomson proved that negatively charged electrons exist as independent elementary particles. He still assumed that electrons are embedded like raisins in a kind of positively charged pudding and can be detached if necessary. Ernest Rutherford's scattering experiments, however, showed that most of the mass of an atom is concentrated in a tiny nucleus. In 1911, he developed the planetary model of the atom, in which electrons orbit a small, heavy atomic nucleus. The fact that apparently solid matter consisted mainly of empty space was rather disconcerting: the radius of the nucleus is only about one hundred-thousandth of the radius of the atom. But at least it was still possible to imagine this mechanically. There was, however, a contradiction: according to Maxwell's laws of electrodynamics, orbiting charges should continuously radiate energy, so the electrons would spiral into the nucleus and the atoms would collapse.

As early as 1900, however, Max Planck, while studying the spectrum of thermal radiation, came to the conclusion "in an act of despair" that the measurements could only be described mathematically by postulating that radiation energy could only take values that were whole multiples of a smallest unit, proportional to the frequency, with a new constant of nature, the "quantum of action", as the factor. The explosive nature of this result only became clear in 1905, when Einstein, to explain the photoelectric effect, put forward the hypothesis that light, and electromagnetic radiation in general, could also be regarded as a stream of particles. In doing so, he abandoned the foundations of classical electrodynamics. Only forty years earlier, Maxwell with his famous equations had found the definitive mathematical form of this theory and seemingly ended the long-simmering dispute as to whether light consisted of particles or waves, in favour of waves. Einstein also showed that the then still unexplained irregular movement of tiny particles suspended in liquids and observed under the microscope (Brownian motion) could be explained by collisions with molecules, which offered a way of proving experimentally that atoms exist. Until then, many scientists had considered atoms a useful concept rather than a reality. With these findings (and the special theory of relativity presented in the same year), Planck and Einstein had clearly left classical physics behind. Light seemed to be both a particle and a wave at the same time. That was unnerving for everyone involved.

Eight years later, Niels Bohr in Copenhagen succeeded in explaining the then-known properties of the hydrogen atom with an atomic model in which electrons, by analogy with Planck's and Einstein's quantization of electromagnetic radiation, can only assume certain energy states and orbit the atomic nucleus on correspondingly well-defined orbits. The wavelengths observed in the emission and absorption of light (the spectral lines characteristic of each atom) were attributed to jumps of electrons between these distinct energy levels.
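For readers who want to see Bohr's idea at work, here is a small Python sketch added for illustration; it is not taken from the historical sources. It assumes the textbook form of Bohr's result, energy levels E_n = -13.6 eV / n², uses rounded constants, and computes the wavelength of the light emitted when an electron jumps down to the second level:

```python
# Bohr model of hydrogen (illustrative sketch with rounded constants):
# energy levels E_n = -13.6 eV / n**2; a jump from a higher to a lower level
# emits a photon whose energy equals the difference between the two levels.
RYDBERG_ENERGY_EV = 13.6057  # ionization energy of hydrogen in eV (rounded)
HC_EV_NM = 1239.84           # Planck constant times speed of light, in eV*nm


def energy_level(n: int) -> float:
    """Energy of the n-th Bohr orbit in eV (negative means bound)."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return -RYDBERG_ENERGY_EV / n**2


def emission_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength of the light emitted when the electron jumps from n_upper to n_lower."""
    if n_upper <= n_lower:
        raise ValueError("emission requires n_upper > n_lower")
    photon_energy_ev = energy_level(n_upper) - energy_level(n_lower)
    return HC_EV_NM / photon_energy_ev


# Visible lines of hydrogen: jumps down to the second level (Balmer series).
for n in range(3, 7):
    print(f"{n} -> 2: {emission_wavelength_nm(n, 2):.1f} nm")
```

The four jumps come out at roughly 656, 486, 434 and 410 nm, close to the measured visible lines of hydrogen; the small deviations stem from the rounded constants and from ignoring the motion of the nucleus.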

The next decisive step was Louis de Broglie's theory of matter waves in 1924: he proposed that not only massless photons (particles of light) but also electrons, which have mass, can be described as waves. This made it clear why only certain electron orbits were possible: those on which the electron wave does not cancel itself out through superposition, i.e. on which a whole number of wavelengths fits around the orbit. However, this theory did not yet allow the observed spectra of atoms with more than one electron to be calculated; it was the last of the "older" quantum theories.
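De Broglie's condition can be checked numerically in the same spirit. The following sketch is again only an illustration: it takes rounded CODATA constants and the radii and speeds of Bohr's orbits as given inputs, computes the electron's wavelength λ = h / (m v) on each orbit, and counts how many wavelengths fit around it:

```python
import math

# Rounded CODATA constants in SI units (illustrative)
PLANCK_H = 6.62607015e-34          # Planck constant, J*s
ELECTRON_MASS = 9.1093837e-31      # kg
BOHR_RADIUS = 5.29177211e-11       # radius of the innermost Bohr orbit, m
INNER_ORBIT_SPEED = 2.18769126e6   # electron speed on the innermost orbit, m/s


def wavelengths_per_orbit(n: int) -> float:
    """Number of de Broglie wavelengths that fit around the n-th Bohr orbit."""
    radius = n**2 * BOHR_RADIUS                      # orbit radius grows as n^2
    speed = INNER_ORBIT_SPEED / n                    # orbital speed falls as 1/n
    wavelength = PLANCK_H / (ELECTRON_MASS * speed)  # de Broglie: lambda = h / (m v)
    return 2 * math.pi * radius / wavelength         # circumference / wavelength


for n in range(1, 5):
    print(n, round(wavelengths_per_orbit(n), 3))
```

The counts come out as the whole numbers 1, 2, 3 and 4 (up to rounding): on Bohr's allowed orbits the electron wave closes on itself, which is the standing-wave picture de Broglie proposed.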

The foundations of "quantum mechanics", which differed fundamentally from classical mechanics but contained it as a limiting case, were subsequently developed between 1925 and 1928 in close collaboration within a European network of physicists. This network, centred in Munich, Göttingen and Copenhagen, included above all Werner Heisenberg, Erwin Schrödinger, Max Born, Pascual Jordan, Wolfgang Pauli, Niels Bohr and Paul Dirac. All of them except Jordan received the Nobel Prize in Physics; Bohr had already been awarded it in 1922, and the others followed in later years.

Guided by the principle of using only observable quantities and with new mathematical methods, Heisenberg, Born and Jordan first formulated matrix mechanics in 1925; Schrödinger then formulated the equivalent but more elegant Schrödinger equation in 1926. In 1927, the "Copenhagen interpretation" of quantum mechanics, which is still widely accepted today, was formulated. Among other things, it includes Born's proposal of 1926 to interpret the squared magnitude of the wave function as the probability of finding a particle at a given location. This view led to intensive discussions. Einstein never fully accepted it ("God does not play dice"). Finally, in 1928, Paul Dirac succeeded in formulating a wave equation for the electron that was also compatible with Einstein's special theory of relativity (the Dirac equation). It was thus able to explain important phenomena in the behaviour of electrons, such as spin, and the subtleties of hydrogen spectra.

This laid the complete foundation for quantum science. The only problem was that, with the tools available at the time, the sophisticated equations could only be solved for very specific cases and with simplifying assumptions.

In the shadow of nuclear physics: quantum chemistry solves the mystery of chemical bonds

In a quick recap, we have again sketched the rise of quantum theory, as in the first instalment of our series, but this time from a chemical angle. In those early years, it caused quite a stir, particularly because of its profound philosophical implications: the yawning emptiness of subatomic spaces, the wave-particle duality, the impossibility of precisely determining the position and momentum of a particle simultaneously, the dissolution of the causalities of our macro-world into statistical results of chance events in the nano-range... All of this contradicted human experience in the macro-world and caused an uncertainty whose implications seemed impossible to grasp.

Scientists were eager to advance quickly to the smallest structures and understand the nature of the atomic nucleus. Especially against the backdrop of the looming and then actual Second World War, this led to a fascination with the hitherto unimaginable forces at work in the very core of the atom: with nuclear physics, the atomic bomb and the attempt to solve mankind's energy problems with nuclear fission and fusion. The bomb, nuclear energy, the exploration of ever stranger particles and the search for a formula that could explain all physical phenomena in a unified theory shaped the public image of modern physics.

However, much less attention was initially paid to the consequences of the new physics for the atomic shell (consisting of electrons), for the bonds between atoms, for the empirical sciences of crystals and metals, and for chemistry. The forces and energies at work here were far less spectacular.

For the hydrogen atom, which has only one electron, the Schrödinger equation can be solved exactly (analytically). In quantum theory, the intuitive orbits on which electrons circle the atomic nucleus in the planetary models of Rutherford and Bohr have become so-called orbitals, which describe the probability of finding an electron at a given location. The possible electron states are distinguished by four quantum numbers and can thus be assigned to the shells and subshells of Bohr's atomic model. Because, according to Pauli's exclusion principle, no two electrons in an atom can occupy the same state, the electrons systematically fill the shells of larger atoms, even though the exact results for the hydrogen atom are no longer entirely correct for larger atoms. Nevertheless, the arrangement in shells qualitatively explains the structure of the periodic table of the elements and shows that it is primarily the electrons of the outermost shell (valence electrons) that are involved in chemical bonds.
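The shell capacities follow directly from the rules for the quantum numbers. The Python sketch below is a simplified illustration: it assumes the standard allowed ranges (l from 0 to n-1, m_l from -l to +l, spin m_s = ±1/2) and that, by Pauli's principle, each combination holds at most one electron. It ignores the fact that real atoms fill their shells in a more complicated order (for example 4s before 3d):

```python
from itertools import product


def allowed_states(n: int) -> list[tuple[int, int, int, float]]:
    """All quantum-number combinations (n, l, m_l, m_s) for principal quantum number n."""
    states = []
    for ell in range(n):  # orbital quantum number l = 0 .. n-1 (s, p, d, f ... subshells)
        for m_l, m_s in product(range(-ell, ell + 1), (-0.5, 0.5)):
            states.append((n, ell, m_l, m_s))
    return states


def shell_capacity(n: int) -> int:
    """Pauli: at most one electron per state, so capacity = number of distinct states."""
    return len(allowed_states(n))


for n in range(1, 5):
    print(n, shell_capacity(n))  # capacities 2, 8, 18, 32, i.e. 2 * n**2
```

The result, 2n² electrons per shell, is the origin of the numbers 2, 8, 18 and 32 that appear as period lengths in the periodic table.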

The difficulty, and also the unattractive aspect, of applying quantum theory to chemical bonds was that although the Schrödinger equation provided good results for the hydrogen atom and quite useful results for larger atoms, it could not be solved analytically for systems of several atoms. For this, simplifying assumptions had to be made.

Walter Heitler and Fritz London in Zurich made a start in 1927 by using quantum mechanical methods to investigate the hydrogen molecule, the simplest conceivable molecule, with two atomic nuclei and two electrons. Their approach was developed further by the American physicist John Slater and the American chemist Linus Pauling into the Valence Bond method.

Linus Pauling, who had spent two years with the pioneers of quantum theory in Europe, showed in 1931 that in larger molecules, too, several atomic orbitals of an atom can combine ("hybridize") into new hybrid orbitals, which explained, for example, the tetrahedral arrangement of the bonds around a carbon atom. The alternating single and double bonds in Kekulé's formula for the benzene ring turned out to be an idealization: in reality, all six bonds of the ring are equivalent. With his influential book "The Nature of the Chemical Bond and the Structure of Molecules and Crystals", Pauling is regarded as the actual founder of quantum chemistry. An extraordinarily productive researcher in several fields, he was awarded the Nobel Prize in Chemistry in 1954 for his work on chemical bonding and the Nobel Peace Prize in 1962 for his campaign against nuclear testing.

While the Valence Bond (VB) method concentrates on bonds between two atoms formed by electron pairs, even when dealing with larger molecules, and is therefore quite close to traditional ideas of chemical bonding, the Molecular Orbital (MO) method, presented by Friedrich Hund and Robert Mulliken in 1929, is based on wave functions that extend over the entire molecule. In the limiting case of exact calculations, both approaches are equivalent and produce identical results, but their different approximations make them more or less suitable depending on the problem at hand. Initially, the VB method was more popular; later the MO method gained in importance, especially as ever greater computing power became available for solving wave equations numerically.

In fact, it took a long time for quantum theory to play an important role in chemistry. Because other methods, rules of thumb and classical theories of limited scope were sufficient for most purposes, the new theories were largely ignored, especially in organic chemistry. In SciFinder, the authoritative database for scientific publications in chemistry, the number of annual publications with the keyword "quantum" only began to rise significantly in the 1960s: just over eight hundred in 1960, over six thousand in 1980 and almost sixty thousand in 2020. From 1965 onwards, the quantum mechanical explanation of previously puzzling stereochemical outcomes of certain reactions of organic molecules (the Woodward-Hoffmann rules) and, above all, the rapidly growing possibilities of using computers to solve the wave equations numerically were decisive in bringing about this turnaround.

Computational chemistry (the term first appeared in 1970) has today become an indispensable, quantum-theory-based toolbox for chemical research. It now includes not only numerical methods for solving wave equations and density functional methods, but also huge databases containing the empirical and theoretical knowledge of two hundred years of chemical research. These can be mined with the help of artificial intelligence. One of the success stories of recent years illustrates just how rapidly this is accelerating research: AlphaFold2 from Google DeepMind, presented in 2020, was already able to predict the spatial structure of larger proteins from their amino acid sequence. AlphaFold3, published this year, extends such predictions to complexes of proteins with DNA, RNA and other biomolecules and can estimate how they interact. The associated database contains over 200 million predicted structures. The software is used extensively around the world, particularly in pharmaceutical research.

On the horizon, there is now also the possibility of dramatically accelerating quantum chemical calculations with the help of quantum computers. For certain classes of problems, these are expected to be much faster than conventional supercomputers, and their mode of operation enables entirely new calculation methods that are particularly well suited to quantum-theoretical problems.

From crafts to systematic materials analysis

Chemists are primarily concerned with the properties and transformations of gaseous and liquid substances — with how atoms combine to form molecules, thereby creating new substances with different properties. Solids are larger assemblies of atoms or molecules that are only partially accessible to the methods of chemistry: Crystals, crystal assemblies, non-crystalline agglomerations of molecules such as glass or plastic — structures that are larger and often more complicated than molecules. Explaining their properties using quantum theoretical methods is even more difficult than for molecules.

People have been working with materials ever since they started using tools. At first, they used materials they could find: wood, stones, hides, skins, bones... Then they learned to produce more sophisticated materials from raw materials using chemical processes and heat treatment: leather from hides, bricks from clay, glass from sand, ceramics from mixtures of minerals. Then they succeeded in obtaining metals such as copper, gold, silver, tin and lead, and alloys such as bronze; some of these later gave their names to entire eras, such as the Copper Age and the Bronze Age. Iron, which gave its name to the Iron Age, was produced in Central Europe from around 800 BC. With increasing experience, heating, mechanical processing (forging) and various additives made it possible to produce improved types of iron and steel. Metals have been at the centre of interest in materials, especially since the beginning of industrialization.

It was not until the middle of the 19th century that the properties and chemical compositions of iron, steel, light metals (aluminium) and ceramics were systematically investigated. Macroscopic-empirical metallurgy played a central role in the emerging materials science. For the industrial production of steam boilers, reliable machines and ever-larger steel structures for bridges and high-rise buildings, it became essential to ensure the quality of materials and steel in particular by characterizing them according to uniform criteria. To this end, public materials testing institutes were founded in many countries — for example in Zurich in 1879 and Stuttgart in 1884. In addition to analyzing the chemical composition and investigating the mechanical properties (hardness, density, breaking strength, etc.), microscopic examinations of metal sections became increasingly important.

A breakthrough towards an atom-based understanding of metals came with Max von Laue's discovery in 1912 that crystals diffract X-rays, and the subsequent determination of the structures of many simple crystals by William Henry Bragg and his son William Lawrence Bragg.
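What the Braggs exploited can be summarized in Bragg's law, nλ = 2d sin θ, which they formulated in 1913: X-rays of wavelength λ reflected from lattice planes a distance d apart reinforce each other only at certain angles θ. The Python sketch below is illustrative; the copper K-alpha wavelength and the rock-salt lattice constant are rounded textbook values chosen as examples:

```python
import math


def bragg_angle_deg(wavelength_nm: float, spacing_nm: float, order: int = 1) -> float:
    """Glancing angle theta in degrees that satisfies n * lambda = 2 * d * sin(theta)."""
    sin_theta = order * wavelength_nm / (2 * spacing_nm)
    if not 0 < sin_theta <= 1:
        raise ValueError("no reflection possible: need 0 < n * lambda <= 2 * d")
    return math.degrees(math.asin(sin_theta))


CU_K_ALPHA_NM = 0.15406   # copper K-alpha X-rays, a common laboratory source
NACL_LATTICE_NM = 0.564   # cubic lattice constant of rock salt (rounded)

# In a cubic crystal, the (200) lattice planes are half a lattice constant apart.
theta = bragg_angle_deg(CU_K_ALPHA_NM, NACL_LATTICE_NM / 2)
print(f"theta = {theta:.2f} deg, reflection at 2*theta = {2 * theta:.2f} deg")
```

For rock salt this gives θ of about 15.9°, i.e. a reflection at 2θ of about 31.7°. Conversely, measuring such angles allows the plane spacings, and hence the crystal structure, to be worked out.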

Research into the structure of crystals and the search for proof of the existence of atoms have been closely connected since ancient times. Democritus (459–370 BC), the founder of atomism, wrote: "Only apparently does a thing have a colour, only apparently is it sweet or bitter; in reality, there are only atoms and empty space." He believed that atoms were too small to be perceived by the human senses. There were different views on the number of different kinds of atoms and their shapes. Apparently inspired by the shape of crystals, Plato assumed that atoms had the shape of the four simplest geometric solids, which could be constructed from triangles and squares. Atomic theory then saw no further development for a long time; in the Middle Ages, notions involving magic dominated. Only with the Renaissance, from the middle of the sixteenth century, did the ancient theory of atoms begin to reassert itself and spread again; it was then developed further in practice by the early chemists. "It is as easy to count atomies as to resolve the propositions of a lover", wrote Shakespeare as early as 1599 in As You Like It.

The range of possible crystal structures is larger than one might think, and for a long time it eluded complete systematization. Only after group theory had been developed in mathematics did Arthur Schoenflies and Yevgraf S. Fyodorov succeed, in 1890/91, in deriving all 230 possible space groups: the distinct combinations of symmetry operations (reflections, rotations and translations) that are compatible with a three-dimensional periodic lattice. However, until von Laue proved experimentally by X-ray diffraction that crystals really do have a three-dimensional periodic structure, these remained theoretical constructs.

Einstein's 1905 proof that matter consists of atoms, von Laue's analytical method of X-ray diffraction since 1912 and Dirac's completion of quantum theory in 1928 opened up completely new horizons for materials sciences. Once the properties of matter could conceivably be traced to atomic structures, it became imaginable to systematize the empirical experience of materials practitioners into a theoretically sound science.

However, there was still a long way to go. The practitioners working with macroscopic properties had established professional structures, disciplines, industries and methods. Quantum theoretical equations, on the other hand, could not yet be applied to the complex structures of many atoms. But new concepts began to change thinking, and new instruments (X-ray spectrometers and, from 1931, the electron microscope) began to change methodologies. In special cases, the theory already proved to be very useful (Theory of Semiconductors 1939, see Instalment 5; Theory of Superconductivity 1957, see Instalment 15).

Initially, however, entirely different developments boosted materials research. The unforeseen shortages of materials experienced as the First World War dragged on longer than expected caused the major powers to take more systematic precautions before the Second World War. And research promised new solutions: in Germany, the invention of ammonia synthesis (the Haber-Bosch process) had significantly delayed defeat, because it allowed nitrogen compounds for explosives and fertilizers to be produced despite the Allied blockade.

Governments stepped up materials research, both to secure the supply of materials and the ability to fall back on substitute materials in an emergency, and to develop more efficient materials and methods. In the USA, President Wilson founded the National Research Council in 1916 at the suggestion of the National Academy of Sciences. In Germany, the system of joint research by various institutions and industries was perfected, with the Materials Testing Office (MPA, later BAM) playing a central role, particularly for metals. The founding of the Notgemeinschaft der Deutschen Wissenschaft in 1920 (later the DFG) was also primarily a response to the experiences of the First World War.

After Germany had focused on international exchange and exports in the 1920s, the National Socialists once again pursued a policy of self-sufficiency and rearmament from the early 1930s onwards, drawing on the experiences of the First World War. They built up an increasingly dense network of institutions for militarily motivated materials research, which constituted a new and efficient innovation system.

However, research into substitute materials ("Ersatzstoffe") showed that increasingly scarce materials, whose use had evolved historically for specific applications, could not simply be replaced across the board by new ones: the requirements and loads on components had often not been measured and systematically recorded, and the materials were insufficiently characterized to allow systematic substitution. Extensive practical tests of individual components remained essential. The new scientific theories and methods were largely helpless when faced with real-world requirements.

The beginnings of modern materials science during the Cold War

After the German defeat in 1945, both the Soviet Union and the USA rushed to exploit the expertise of German materials and armaments research by removing equipment and deporting or recruiting scientists. On the Anglo-American side alone, up to 12,000 "investigators" were active in the former Reich territory, preparing 4,000 reports on the possible military relevance of research activities. The figures on deported or recruited scientists are inconsistent. The best known of them is the rocket scientist Wernher von Braun: SS member and leading developer of the V2, the "Wunderwaffe" ("wonder weapon"). More forced labourers died producing it than people were killed by its use. He was later responsible for developing the Saturn V rocket, which made the moon landing possible in 1969.

With the experience gained during the war, interest grew, particularly in the USA, in using the new scientific methods to determine the properties of metals and other materials more precisely and to create a theoretical basis for the development of new materials which went beyond the empirical, engineering approach.

The term materials science originated in the USA in the early 1950s and applied mainly to developments rooted in metallurgy. The first Department of Materials Science was established at Northwestern University in 1959, and other universities followed suit. Metallurgists, ceramics specialists, physicists, chemists and biologists contributed their specialist knowledge. The new understanding of the structure and interaction of atoms, molecules and elementary particles made possible by quantum theory, together with new methods for analyzing structures and dynamics at the nano level, built bridges between previously separate fields of knowledge and established common methods. Industrial laboratories also played a major role in the emergence of the new interdisciplinary approach: above all William Shockley's working group at Bell Laboratories, where the transistor was invented in 1947/48 (the silicon solar cell followed there in 1954), and J.H. Hollomon's working group at the General Electric Laboratory, which among other things succeeded in producing artificial diamonds.

The next important institutional step was suggested by John von Neumann (1903–1957), a polymath and highly influential member of the scientific community. Born into a Hungarian-Jewish family, the child prodigy (at the age of six he could divide eight-digit numbers in his head and converse in ancient Greek) first completed a degree in chemical engineering in Zurich and shortly afterwards obtained a doctorate in mathematics in Budapest. At the age of twenty-four, he was the youngest lecturer at the University of Berlin and published several influential mathematical papers. In 1932, in his book Mathematical Foundations of Quantum Mechanics, he showed that Heisenberg's matrix mechanics and Schrödinger's wave mechanics are equivalent and proposed a mathematically rigorous synthesis based on Hilbert spaces, which, however, did not prevail among physicists against Dirac's approach, formulated at the same time. From 1929, he commuted between Europe and the USA and, after the Nazis came to power in 1933, stayed on as Professor of Mathematics at the Institute for Advanced Study in Princeton, to which Einstein had also emigrated. In the Manhattan Project, he played a key role in the development of the plutonium bomb and sat on the target committee for the atomic bombs to be dropped on Japan. In the 1940s, von Neumann designed key aspects of today's computer architectures and programming techniques; he is regarded as one of the founders of computer science.

In 1954, he was appointed to the five-member Atomic Energy Commission (AEC), where he was responsible for science. In this role, together with the influential solid-state physicist Frederick Seitz (who after his retirement became a highly paid denier of the harm caused by tobacco and of climate change), he developed the concept for an AEC-funded interdisciplinary materials research centre at the University of Illinois, which was meant to serve as a model for further institutions of this kind. His death in 1957 prevented its realization, but the idea was soon taken up again.

The Soviet Union's success in launching Sputnik, the first artificial satellite to orbit the earth, on October 4, 1957, triggered profound fears in the USA of its rival's technological superiority. The Cold War opponent not only had the prospect of developing new earth observation and communication systems, but had also demonstrated rockets capable of delivering nuclear bombs to any target on earth. In response, President Eisenhower founded the Advanced Research Projects Agency (ARPA, later DARPA) in 1958, which identified the development of suitable materials as an important bottleneck for space and military technologies.

With the success of the Manhattan Project, basic research in physics was now recognized as the leading science for scientific and technological progress. The aim now was to raise the traditionally pragmatic, technically oriented materials sciences to a scientific level. This required not only a combination of basic research and empirically based engineering disciplines, but also the training of young scientists who could think in an interdisciplinary way and combine different approaches. For this reason, the decision was made against central research centres, such as those established in the Manhattan Project, and in favour of a network of interdisciplinary research and teaching facilities at universities, as envisioned by von Neumann. By 1972, ARPA had established twelve such facilities, and many other universities set up departments of their own.

Following the invention of the transistor, increasingly intensive efforts to produce high-performance semiconductors for ever smaller electronic components played a driving role in materials science. In 1953, Siemens developed a process for producing high-purity single crystals of silicon, which, owing to its larger band gap (the energy gap between the valence band and the conduction band), is much more suitable for microelectronics than the previously dominant germanium. Producing high-purity, flawless crystals, understanding the effect of deliberately introduced impurities (doping) and realizing ever smaller structures required pioneering work in materials science and technology. In 1971, the first commercial microprocessor, the Intel 4004, became available. The electronic structures incorporated into it were only 10 micrometres in size.
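Why a larger band gap matters can be illustrated with a rough estimate. The number of charge carriers created by heat alone in a pure semiconductor grows roughly like exp(-E_g / 2kT). The Python sketch below compares this factor for silicon and germanium, assuming room-temperature band gaps of about 1.12 eV and 0.66 eV; it deliberately ignores the material-specific prefactors and the slight temperature dependence of the gaps, so it is an order-of-magnitude illustration only:

```python
import math

BOLTZMANN_EV_PER_K = 8.617333e-5                     # Boltzmann constant in eV/K
BAND_GAP_EV = {"germanium": 0.66, "silicon": 1.12}   # approximate room-temperature gaps


def thermal_factor(gap_ev: float, temperature_k: float) -> float:
    """exp(-Eg / 2kT): the dominant temperature dependence of the intrinsic carrier density."""
    return math.exp(-gap_ev / (2 * BOLTZMANN_EV_PER_K * temperature_k))


def silicon_to_germanium_ratio(temperature_k: float) -> float:
    """Ratio of the silicon factor to the germanium factor (prefactors ignored)."""
    return (thermal_factor(BAND_GAP_EV["silicon"], temperature_k)
            / thermal_factor(BAND_GAP_EV["germanium"], temperature_k))


for t in (300, 400):
    print(f"{t} K: silicon/germanium = {silicon_to_germanium_ratio(t):.1e}")
```

At 300 K the factor for silicon is roughly ten thousand times smaller than for germanium, and still several hundred times smaller at 400 K. Fewer thermally generated carriers mean that silicon devices keep their designed behaviour at higher operating temperatures.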

By the end of the hot phase of the Cold War, this had created the key conceptual and institutional prerequisites for the development of a theoretically sound materials science that could bring together all relevant disciplines, perspectives and areas of experience. In practice, however, there was still a long way to go. During early computer development, the concepts of quantum theory could only serve as a guide, as the complicated equations could not yet be solved for such complex systems. Different disciplines with their different perspectives and very differently structured pragmatic experience were working side by side, but at least they were able to talk to each other using new shared methods. In the next instalment, we will see how the picture fundamentally changed in the following decades and completely new approaches emerged, which had far-reaching consequences, not least for battery development.
